A few years ago, it was hard to imagine how AMD would have survived without re-entering the datacenter with its CPU and GPU compute engines. And now, it is hard to imagine how the chip maker could have possibly thrived without a revitalized GPU compute engine business.

Intel knows a thing or two about this, as we have discussed recently, but even Intel says it will benefit later this year from the generative AI wave with its Gaudi line of matrix math accelerators, which have just been upgraded with their third generation.

AMD is doing a whole lot better than it ever has with datacenter compute engines, which is something that many of us are not yet used to. Intel still has a bigger server CPU business than AMD, but AMD arguably has a stronger one. But as we have said before, we think that the datacenter CPU market will settle out with a cut-throat battle between Intel and AMD, with each having somewhere around 40 percent share of CPU sales and various Arm processors – some of them homegrown and used by the cloud builders and hyperscalers that comprise more than half of the market – and things like IBM mainframe and Power and emerging RISC-V CPUs comprising the remaining 20 percent.

We also think that the X86 architecture will be the new legacy platform, and that Intel and AMD have no desire to compete directly with emerging Arm chips. They know a lot of enterprise code is written for X86 platforms and that this is not going to change quickly. Just ask IBM, which is still selling plenty of System z mainframes and Power Systems based on its own chips.

AMD’s continuing rise in the datacenter, despite a server recession outside of massive investments in GPU-accelerated AI servers, is still a thing to behold. It is not as dramatic as the rocket rise of Nvidia in the datacenter, but it is a reasonably close second.

In the first quarter ended in March, AMD’s overall revenues were only up 2.2 percent to $5.47 billion, and fell 11.3 percent sequentially, which is more or less normal for a Q1 in any year. AMD posted a $123 million net income, which was a considerable improvement over the $139 million loss it posted in the year-ago period.

AMD exited the quarter with $6.04 billion in the bank, which is most assuredly a comfort to chief executive officer Lisa Su but is only one-quarter of the cash hoard that Nvidia has today, and will probably be closer to one-tenth of it by the time Nvidia gets to the end of this calendar year. We are projecting that Nvidia will break through $100 billion in sales in fiscal 2025 (roughly analogous to calendar 2024) and will possibly have $75 billion in cash in the bank by year’s end.

Nvidia is not getting easier to compete against, but more formidable. But there is always room for a second, staunch competitor – and the market most definitely wants an alternative to the Nvidia stack to drive up competition and to drive down costs.

In the March quarter, AMD’s Data Center group had sales of $2.34 billion, up 80.5 percent year on year and up 2.4 percent sequentially in what is usually a down quarter for datacenter spending and what is still a weak server CPU market. (Well, for Intel anyway.) Operating income exploded for the Data Center group in Q1, despite the relatively high cost of making Instinct MI300 series GPUs and despite the fact that hyperscalers and national HPC labs both argue for and receive very aggressively low pricing on their compute engines, putting pressure on margins for all compute engine makers who sell to them. To be precise, AMD’s operating income in the Data Center group was up by a factor of 3.65X to $541 million, and this was mostly due to the “Genoa” and “Bergamo” Epyc 9004 upgrade cycle and rising average selling prices as companies buy CPUs with a large number of cores.

As we have been arguing for a while, keeping servers in the field longer means buying more top bin parts to stretch the useful life of the machines, and this seems to be playing out. Paying the premium to get a top bin part is now worth it, and it wasn’t when hyperscalers and cloud builders were only using machines for three years.

We don’t care about this except inasmuch as success outside of the datacenter means there is less pressure on the datacenter for AMD, but its Client group, which makes CPUs, APUs, and GPUs for PCs, turned in its third quarter of not completely awful results, with revenues up 85.1 percent to about $1.37 billion and an operating income of $86 million, a whole lot better than the $739 million in sales and $172 million operating loss in the year-ago period.

Gaming GPUs took it on the chin in Q1 2024, with sales off 47.5 percent to $922 million and operating income down 51.9 percent to $151 million. The FPGA line expressed in the AMD Embedded group is at the beginning of a new product cycle, with the initial Versal 2 devices being announced a few weeks ago, and it is down 45.8 percent to $846 million, with operating income down 57.1 percent to $342 million.

Epyc server CPU sales are making up for these revenue and operating income declines to a certain extent, but AMD must be unhappy that the Gaming and Embedded groups are not doing better.

In our financial charts, we go back to Q1 2015, which is when AMD threw down the gauntlet and promised to get back into the datacenter market and earn the respect and trust of hyperscaler, cloud builder, HPC center, and enterprise customers once again after basically ceding compute engines to Intel in 2013 or so.

Back then, AMD made more money from datacenter GPUs than it did in datacenter CPUs – and it was not very much money in either case, as you can see in the chart above. AMD started getting traction in CPUs and GPUs back in 2017, and Epyc CPUs took off in 2018 and grew sharply until the server recession hit hard, thanks in large part to a curtailing of spending by hyperscalers and cloud builders, in Q1 2023. For AMD at least, which has once again become the darling of the hyperscalers and cloud builders and has made inroads again with HPC centers, the server CPU recession looks to be mostly over.

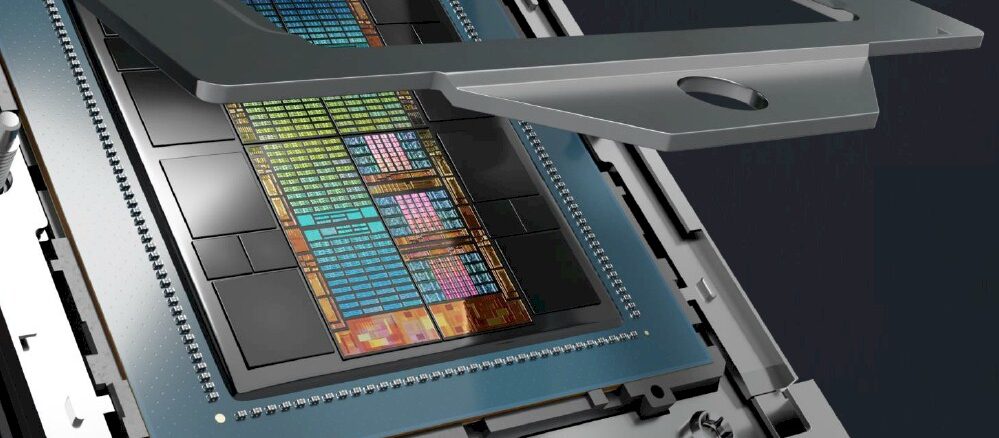

And, as you can plainly see, Instinct GPU sales are up like an Nvidia rocket thanks to the “Antares” MI300 family, which is the third-time charm for AMD’s datacenter GPUs. That family runs from the MI300A hybrid CPU-GPU device that is part of the 2+ exaflops “El Capitan” supercomputer being tuned up right now at Lawrence Livermore National Laboratory to the MI300X all-GPU devices that are being installed by hyperscalers (particularly Microsoft, Google, and Meta Platforms, if the rumors are right).

The MI300s can stand toe-to-toe with what Nvidia can ship today, which is “Hopper” H100 and H200 GPU accelerators.

Right now, the MI300 is a drag on profits and is not yet at the level of the operating profits averaged across all datacenter products, but AMD is working on that. AMD said on the call that in Q4 2023 and Q1 2024 combined, it sold more than $1 billion in MI300 GPUs. Our model put GPU sales at $415 million in Q4 2023 – driven mostly by the MI300As going into the El Capitan machine – and we surmise that AMD sold about $610 million in MI300s in Q1 2024 – driven mostly by MI300X devices being sold into the hyperscalers and cloud builders. That $610 million of GPU revenue in Q1 2024 is 47 percent higher sequentially and a factor of 9.4X higher year on year – not just hitting it out of the ballpark, but hitting it out of the parking lot behind the ballpark. In the next two quarters, AMD will hit it out into the river next to the parking lot. . . .
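For anyone who wants to check the growth figures, here is a minimal Python sketch of the arithmetic. The dollar amounts are the model estimates quoted above rather than AMD-reported numbers, and the variable and function names are purely illustrative.

```python
# Model estimates quoted in the text, in millions of dollars.
# These are estimates, not figures reported by AMD.
gpu_q4_2023 = 415.0
gpu_q1_2024 = 610.0
yoy_multiple = 9.4  # Q1 2024 versus Q1 2023, as stated in the text


def pct_change(new: float, old: float) -> float:
    """Return the percent change going from old to new."""
    return (new - old) / old * 100.0


# AMD said the two quarters combined came to more than $1 billion.
two_quarter_total = gpu_q4_2023 + gpu_q1_2024

# Quarter-on-quarter growth implied by the two estimates.
sequential_growth = pct_change(gpu_q1_2024, gpu_q4_2023)

# Working backwards from the 9.4X multiple gives the implied year-ago base.
implied_q1_2023 = gpu_q1_2024 / yoy_multiple

print(f"Two-quarter GPU sales: ${two_quarter_total:,.0f} million")
print(f"Sequential growth: {sequential_growth:.1f} percent")
print(f"Implied Q1 2023 GPU sales: ${implied_q1_2023:,.0f} million")
```

The two estimates sum to a little over $1 billion, which squares with what AMD said on the call, and the 9.4X multiple implies a year-ago GPU base of only about $65 million.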

If you make some assumptions and do the math, as we do in our model, we think AMD had around $35 million in DPU sales and maybe $65 million in datacenter FPGA sales, which leaves $1.63 billion in Epyc server CPU sales, which is down 8.1 percent sequentially but up 40.5 percent year on year. Our model shows that hyperscalers and cloud builders acquired $1.15 billion in Epyc CPUs, up only 27.9 percent year on year, but enterprises accounted for the remaining $472 million in Epyc CPUs, up 85.2 percent.
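The subtraction behind that Epyc number can be sketched in a few lines of Python. Only the Data Center total is a reported figure; the GPU, DPU, and FPGA amounts and the customer split are the model estimates quoted above, and the variable names are illustrative. Because every input is rounded, expect the pieces to disagree by a few million dollars.

```python
# All figures in millions of dollars.
data_center_total = 2340.0  # reported Data Center group revenue, rounded
gpu_sales = 610.0           # model estimate
dpu_sales = 35.0            # model estimate
fpga_sales = 65.0           # model estimate

# Whatever is left after GPUs, DPUs, and FPGAs is attributed to Epyc CPUs.
epyc_cpu_sales = data_center_total - gpu_sales - dpu_sales - fpga_sales

# Customer split from the model, using the rounded figures in the text.
hyperscale_cpu = 1150.0
enterprise_cpu = 472.0
segment_sum = hyperscale_cpu + enterprise_cpu

# Rounded inputs mean the split does not sum exactly to the residual.
rounding_gap = epyc_cpu_sales - segment_sum
enterprise_share = enterprise_cpu / segment_sum * 100.0

print(f"Epyc CPU residual: ${epyc_cpu_sales:,.0f} million")
print(f"Hyperscale plus enterprise: ${segment_sum:,.0f} million")
print(f"Gap from rounding: ${rounding_gap:,.0f} million")
print(f"Enterprise share of Epyc sales: {enterprise_share:.1f} percent")
```

On these rounded inputs, enterprises account for a bit under 30 percent of Epyc revenue, even though that slice is growing much faster than the hyperscaler and cloud builder slice.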

AMD has not provided much in the way of CPU forecasting, but since last fall, AMD has been putting out guidance on GPU sales, and on the Q1 call it raised that guidance for the second time, now saying that it will break $4 billion in Instinct GPU sales in 2024.

“The MI300 ramp is going really well,” Su said on the call with Wall Street analysts after the numbers were put out. “If we look at just what’s happened over the last 90 days, we have been working very closely with our customers to qualify MI300 in their production datacenters, both from a hardware standpoint and a software standpoint. So far, things are going quite well, and what we see now is just greater visibility to both current customers as well as new customers committing to MI300. So that gives us the confidence to go from $3.5 billion to $4 billion. And I view this – as you know very much – as a very dynamic market, and there are lots of customers. We have over a hundred customers that we’re engaged with in both development as well as deployment. So overall the ramp is going really well. As it relates to the supply chain, actually I would say I’m very pleased with how supply has ramped. It is absolutely the fastest product ramp that we have done. It’s a very complex product.”

Here is the history of the forecasts as they changed and how we think the quarters would line up against those forecasts:

We think that the best-case scenario we put together in January of this year is still a very possible outcome for the GPU business at AMD. Su was clear, once again, that AMD is not supply constrained at that $4 billion number, any more than it was at that $3.5 billion number. AMD’s potential supply is always pegged at being larger than its forecast. That said, Su admitted that AMD was absolutely supply constrained in Q1 and could have sold more GPUs if it had them.

As the manufacturing process for GPUs improves and AMD makes enough to sell to Tier 2 service providers and enterprises (who do not get the most aggressive pricing on any chips), the profitability of those GPU sales will improve even faster. And as the “Turin” Epyc 9005 chips based on the Zen 5 family of cores come out later this year, the CPU story will only get better, too.

All within the confines of a re-emerging Intel and the onslaught of homegrown Arm server CPUs and homegrown AI accelerators among the hyperscalers and cloud builders. This is the real threat to – and governor on – the AMD datacenter compute engine business.

But for now, AMD is prognosticating that its Data Center group will have growth in the second quarter of 2024 of “strong double digits” and that both CPU and GPU sales will rise year on year. And the server market itself is expected to improve in the second half – something that Intel has talked about as well. We shall see. We are well aware that there is a lot of older iron out there in the server fleets of the world, but we also know running them for a quarter or two more is not going to hurt much and will leave that much more dough to apply to AI proofs of concept and actual buildouts.
