This deep dive takes a look at the results of the DevQualityEval v0.4.0 which analyzed 138 different LLMs for code generation (Java and Go). LLama 3 is gearing up to take GPT-4’s throne, the Claude 3 family is great with Haiku being the most cost-effective, and Mistral 7B could be the next model of choice for local devices.

The results in this post are based on 5 full runs using DevQualityEval v0.4.0. Metrics have been extracted and made public to make it possible to reproduce findings. The full evaluation setup and reasoning behind the tasks are similar to the previous dive. For up-to-date results, check out the latest DevQualityEval deep dive.

Table of contents:

- Comparing the capabilities and costs of top models

- Costs need more than logarithmic scale

- LLMs' chattiness can be very costly

- Over-engineering of code and useless statements

- Open-weight and parameter size matter

- What comes next? DevQualityEval v0.5.0

The purpose of the evaluation benchmark and the examination of its results is to give LLM creators a tool to improve the results of software development tasks towards quality and to provide LLM users with a comparison to choose the right model for their needs. Your feedback guides the next steps of the eval. With that said, let’s dive in!

Comparing the capabilities and costs of top models

The graph shows the best models in relation to their scores (y-axis, linear scale, weighted on their test coverage) and costs (x-axis, logarithmic scale, $ per million tokens). The sweet spot is the top-left corner: cheap with good results.

Reducing the full list of 138 LLMs to a manageable size was done by sorting based on scores and then costs. We then removed all models that were worse than the best model of the same vendor/family/version/variant (e.g. gpt-4-turbo is better than gpt-4 so the latter can be removed). For clarity, the remaining models were renamed to represent their variant (e.g. just gpt-4 instead of gpt-4-turbo). Even then, the list was immense. In the end, only the most important new models (e.g. llama-3-8b and wizardlm-2-7b), and fundamental models with at most the last two versions for comparison (e.g. command-r-plus and command-r) were kept.

The above image has been divided into sections with lines to further differentiate:

- Scores below 45 (not on the graph): These models either did not produce compiling code or were simply not good enough, e.g. produced useless code. They are not recommended and need serious work for the evaluation’s tasks.

- Scores up to 80 (bottom): These models returned source code for each prompt 100% of the time. However, that code often did not compile, e.g.

gemma-7b-ithad only 3 out of 10 instances that compiled, but these 3 instances reached 100% coverage. These models require deeper work to fulfill the main objectives and are not recommended unless manual rewrites are acceptable. - Scores up to 110 (middle): These models reached the necessary coverage at least half of the time, returning code that tends to be more stable and non-overengineered. It could be that more fine-tuning is all that these models need, e.g.

command-r-plusreached almost perfect results for Java but consistently made the same mistake of wrong imports, leading to 0% coverage for Go. - Scores starting with 110 (top): These models are dependable for the major objectives and are recommended for generating tests for Java or Go. More fine-tuning leads to better scores, e.g.

wizardlm-2-8x22bgreatly outperformsmixtral-8x22b. For the most part, selection in this section is a matter of costs, e.g.gpt-4-turbowith $40 per million tokens vsllama-3-70b-instructwith $1.62 are vastly different in cost.

Putting all this information together gives us the following outcome (for now):

gpt-4-turbois the undisputed winner when it comes to capabilities with a 150 out of 150 score but at a high cost.llama-3-70bis the most cost-effective withwizardlm-2-8x22bsecond andclaude-3-haikuthird. That’s our triangle of love in the “cheap with good results” corner.

However, there are multiple flaws at work! The king of LLMs could be still dethroned with this evaluation version!

During the hours that we spent looking at logs of the results, improving reporting for better insights on all the data, and of course, contemplating which emojis should be used on the header image of this blog post, we noticed a bunch of problems that affect all evaluation benchmarks of LLMs. The following sections discusses these problems as well as other findings, and how they (might) be solved in the next version of the DevQualityEval: v0.5.0. If you found other problems or have suggestions to improve the eval, let us know. With that said, let’s jump into the main findings.

Costs need more than logarithmic scale

When we started creating this evaluation benchmark, we knew one fact: costs should be an important aspect of every benchmark. Arguably, costs can be as important as time: the best result can be useless if it takes a year to compute. For example, we learned with our own product that every editor action needs an appropriately fast reaction. Think of an auto-complete that takes a minute to show - horrible! However, analyses outside of an editor can take even hours when they bring good results. The same logic is applied to costs.

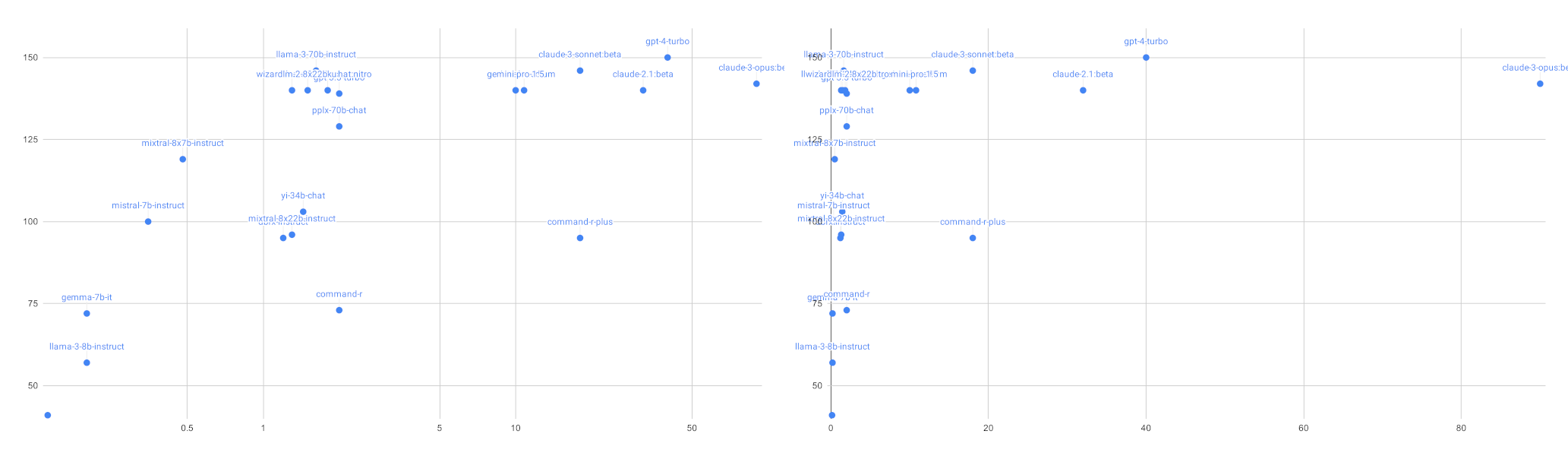

However, costs of LLMs are a completely different breed of cost-monsters. Look at the following graph (like before, scores are represented on the y-axis while the x-axis shows costs) where you have costs on the left side in logarithmic scale, and on the right in linear scale:

With the logarithmic scale, we have a nice graph where labels are almost perfectly spread to be readable. If you do not look at the numeric ticks of the axis, you never know how costly the models on the right are.

With the linear scale, you can clearly see that

llama-3-70b-instruct (top-left corner) is cheap and good

claude-3-sonnet:beta is on the same score-level but more expensive

gpt-4-turbo even doubles the price but it has just a slightly better score.

However, we are not done yet, because on the far right claude-3-opus:beta doubles again the price of GPT-4 but it is not as good in score. With a logarithmic scale, these facts are very hard to see and consider. Of course, you could say that this is a problem of the evaluation and/or a problem of interpreting the results/scoring. Admittedly it is, and the next findings will reason on how to improve those problems. Nevertheless, it also shows that costs must be part of an evaluation because even with perfect interpretation and scoring of results, if a model is worse than another in capability and is also way more costly than the better solution, it should have a proportional lower score in the overall scoring.

Scoring that includes costs helps LLM users to make the right choice for their usage.

Can we dethrone the king of LLMs? Yes, factoring in costs,GPT-4 Turbo is much less cost-effective than Llama-3-70B-Instruct which has a good score, useful results, and is extremely cost-effective. That said, we are still not done with our findings.

LLMs' chattiness can be very costly

Another cost factor that must be taken into account of an overall score is “chattiness”, i.e. is the answer to a prompt direct and minimal, or is there lots of explanation? Source code answers also tend to include lots of comments (most often obvious comments) and complex implementations that could be simplified. Let’s first look at the prompt for the Java use case:

Given the following Java code file “src/main/java/com/eval/Plain.java” with package “com.eval”, provide a test file for this code with JUnit 5 as a test framework. The tests should produce 100 percent code coverage and must compile. The response must contain only the test code and nothing else.

package com.eval; class Plain { static void plain() { } }

According to gpt-tokenizer.dev this prompt has 84 tokens which costs about $0.003 with GPT-4. Optimizing the size of our prompt (e.g. how much context we define) and how often we send it (e.g. for auto-completion) is our job. However, there is a key aspect in this prompt: The response must contain only the test code and nothing else. This task needs to be understood by the model and executed. Looking at one of the answer of gpt-4-turbo we see that it does a superb job:

package com.eval; import org.junit.jupiter.api.Test; import static org.junit.jupiter.api.Assertions.*; class PlainTest { @Test void testPlain() { assertDoesNotThrow(() -> Plain.plain()); } }

There is only a code-fence with Java code, and the solution is almost minimal (assertDoesNotThrow is not really needed). Those are 50 tokens so about $0.001. Remember GPT-4 is not cheap but there is an even more expensive model, claude-3-opus:beta, which does an excellent job as well for Java. Here is one of its answers:

Here’s the JUnit 5 test file that provides 100 percent code coverage for the given Java code:

package com.eval; import org.junit.jupiter.api.Test; class PlainTest { @Test void testPlain() { Plain.plain(); } }

Those are 59 tokens (more than GPT-4) which cost $0.00531 so about 5 times more than GPT-4 for a similar answer. The reason is that Claude Opus did not stick to the task or did not understand it: there is additional (unnecessary) text before the code-fence. Of course, such outputs could be removed with better prompting, but still, the model should have understood what we wanted. For that reason we introduced a metric response-no-excess which checks if there is more than just a code-fence for an answer. Some models are much chattier than others. An extreme example is 01-ai/yi-34b (by the way its sibling 01-ai/yi-34b-chat does a much better job) which gives huge answers for Go that do not even fully connect to the task itself. One result has 411 tokens, which is not just expensive but also slower no matter the LLM.

Looking at llama-3-70b-instruct: can we still dethrone GPT-4? Not with this metric. Llama 3 has 60% while gpt-4-turbo has 100% for the response-no-excess metric. That sounds like a huge gap, but consider that the excess is minimal (e.g. Here is the test file for the given code: for one case) and we can write better prompts that could eliminate this problem. Additionally, in applications, you can easily automate ignoring excess content. Therefore, this metric should not take a big role in an overall score, but it is still important since it shows if the model understands the task completely and can highlight overly chatty (and therefore costly) models. More logical coding tasks are far harder than asking to output only the testing code.

Combining the base costs of a model with the response-no-excess and costs for every prompt and response will give LLM users guidance on the cost-effectiveness of LLMs for day-to-day usage. With that, we can say that gpt-4-turbo still has better results, but llama-3-70b-instruct again gives you better cost-effectiveness for good results. However, we didn’t look at the code just yet. On to other findings!

Over-engineering of code and useless statements

Until now, all findings talked about costs, but obviously when running an evaluation benchmark on software development quality, there are two elephants in the room: code and the quality of that code. DevQualityEval version 0.4.0 assesses code like this:

- Take the first code-fence of the response and write it in the appropriate test file. (The test file path differs for Java and Go as well as for other languages and even frameworks).

- Compile the test code. The

files-executedmetric is increased by 1 point if the code compiles successfully. Given the simplicity of the current task and cases, it could be surprising to know that only 44.78% of all code compiled. - Execute all tests using a coverage instrumentation. 100% coverage gives 10 points to Gryffindor 🧙 the

coverage-statementmetric. Only 42.57% of coverage points have been reached, which may sound bad but another view is that 95.05% of all compilable code reached 100% coverage.

Given the current tasks (writing automated tests) it is very appropriate to include code coverage as a metric.

Additionally, we learned that the coverage-statement metric needs to have more weight than other metrics, since beautiful responses are worth nothing when they do not include executable test code that covers the implementation under test. However, quality code and especially the quality of tests isn’t just about being executable and covering statements. Quality is also about precision, engineering and behavior. Clearly, such an assessment should be included in the next version of the DevQualityEval.

This is what we consider a perfect answer for the Java task:

package com.eval; import org.junit.jupiter.api.Test; class PlainTest { @Test void plain() { Plain.plain(); } }

Given the size of the example one would think that there is not much to this, but there are multiple parts to check:

- Is the correct package used, so the test file fits in with its implementation file?

- Does the result only include imports that are used?

- Are modifiers minimal?

- Does the class name follow the common convention for test classes?

- Does the test method name follow the common convention for test methods?

- Is the function under test, a static method, called appropriately without an extra object?

- Are there no asserts because there is nothing to check?

- Is the code style appropriate?

Are you surprised that there are so many things that can be assessed for such a result? Every Java developer that would receive the same task would most likely write the same code when they are not using additional tools, e.g. generators. Developers take care of every detail intuitively because they practice writing tests over and over. The same way LLMs practice. Therefore, we should assess the same details, which is a difficult problem. Look at the following example of gpt-4-turbo:

package com.eval; import org.junit.jupiter.api.Test; import static org.junit.jupiter.api.Assertions.*; class PlainTest { @Test void testPlainMethod() { assertDoesNotThrow(() -> Plain.plain(), "Plain.plain() should not throw"); } }

Notice the following differences:

- Wildcard import for one use

- Spaces vs tabs

- Extra empty line

- Unconventional test method name

- Extra assert that will always be true

- Obvious description for the assertion

Given that most code styles (in this case, spaces vs tabs and the empty line) are handled by formatters, it should be clear that such details should never add points. However, code differences must be assessed and we are implementing the following tooling and assessments to allow for better scoring:

- Compare code with the perfect solution only on the AST level, as most code styles do not apply there.

- Diff code towards the perfect solution on the AST level but allow for multiple perfect solutions if possible. The more the code differs, the fewer points are awarded.

- Apply linters and add one point for every rule that is not triggered. This allows us to find e.g. code that is always false/true like

assertEquals(0, 0, "plain method not called");ofllama-3-8b-instruct:extended.

With these measures, we are confident that we have the next good steps for marking higher-quality code. Looking at gpt-4-turbo we see only one 1 perfect solution out of 10,. In the meantime,while llama-3-70b-instruct has 7, with (one case is very curious because it checks the constructor, which is surprising, but valid!). This makes llama-3-70b-instruct cost-efficient while providing higher quality code. However, this is also where we can introduce claude-3-haiku which has the same coverage score as our disputing kings of the eval, but less total score up until this point. With this new assessment, it has 6 perfect solutions and those that differ are far closer to the perfect solution than what llama-3-70b-instruct produces: this makes claude-3-haiku our new top model that is also cost-effective!

However, there are two more aspects that need to be addressed that cannot be ignored: open-weight and parameter size.

Open-weight and parameter size matter

The king of the last moment Claude 3’s Haiku has one disadvantage that cannot be ignored: it is a closed-source model. Which means that it should not be used for secure areas or for private data. This makes llama-3-70b-instruct again the new king for now.

Another aspect of open-weight models is their parameter size which determines most importantly their inference costs. This makes multiple models instantly interesting to consider: wizardlm-2-8x22b, mixtral-8x7b-instruct or even mistral-7b-instruct which can run on today’s phones. However, continuing these assessments manually is very time consuming. Time is better spent on the next and final section of this article.

What comes next? DevQualityEval v0.5.0

The following chart shows all 138 LLMs that we evaluated. Of those, 32 reached a score above 110 which we can mark as having high potential.

However, this graph also tells a lie since it does not include all the findings and learnings that this article extensively scrutinized about. The next version of the DevQualityEval v0.5.0 will therefore address these shortcomings additionally to the following features:

- Assess stability of results, e.g. introduce a

temperature = 0variant. - Assess stability of service, e.g. retry upon errors but give negative points.

- Introduce Symflower Coverage to receive a detailed coverage report for more complex cases.

- More cases for test generation to check the capability of LLM towards logic and completeness of test suites. We predict that this feature will greatly reduce the amount of well-scoring LLMs.

Make sure you check out our most recent deep dive for up-to-date information and the latest results of the DevQualityEval benchmark.

Hope you enjoyed reading this article and we would love to hear your thoughts and feedback on how you liked the article, how we can improve the article and how we can improve the DevQualityEval.

If you are interested in joining our development efforts for the DevQualityEval benchmark: GREAT, let’s do it! You can use our issue list and discussion forum to interact or write us directly at [email protected] or on Twitter.