Kubernetes as a Scheduler vs. an API Proxy

Trusted by security and data teams around the world

Al breaks your old defenses.

New challenges require intelligent responses

One platform to secure AI - from data to access to action.

At rest, in motion, and in use

The Cyera Advantage

Built for AI. Not for Yesterday.

Speed

Deploy in minutes and surface sensitive data exposure long before legacy tools even finish setup.

Scale

Scan and operate on massive cloud, SaaS, and hybrid data estates with minimal operational overhead.

Trust

Al-native, enriched classification that learns your business and surfaces real risk so teams can act with confidence.

Outcomes

80% less risk in 3 months*

Automated prioritization and guided remediation drives fast, measurable reduction of enterprise data risk.

*Based on a subset of customer examples

Protect and comply at scale by making data and AI security highly actionable.

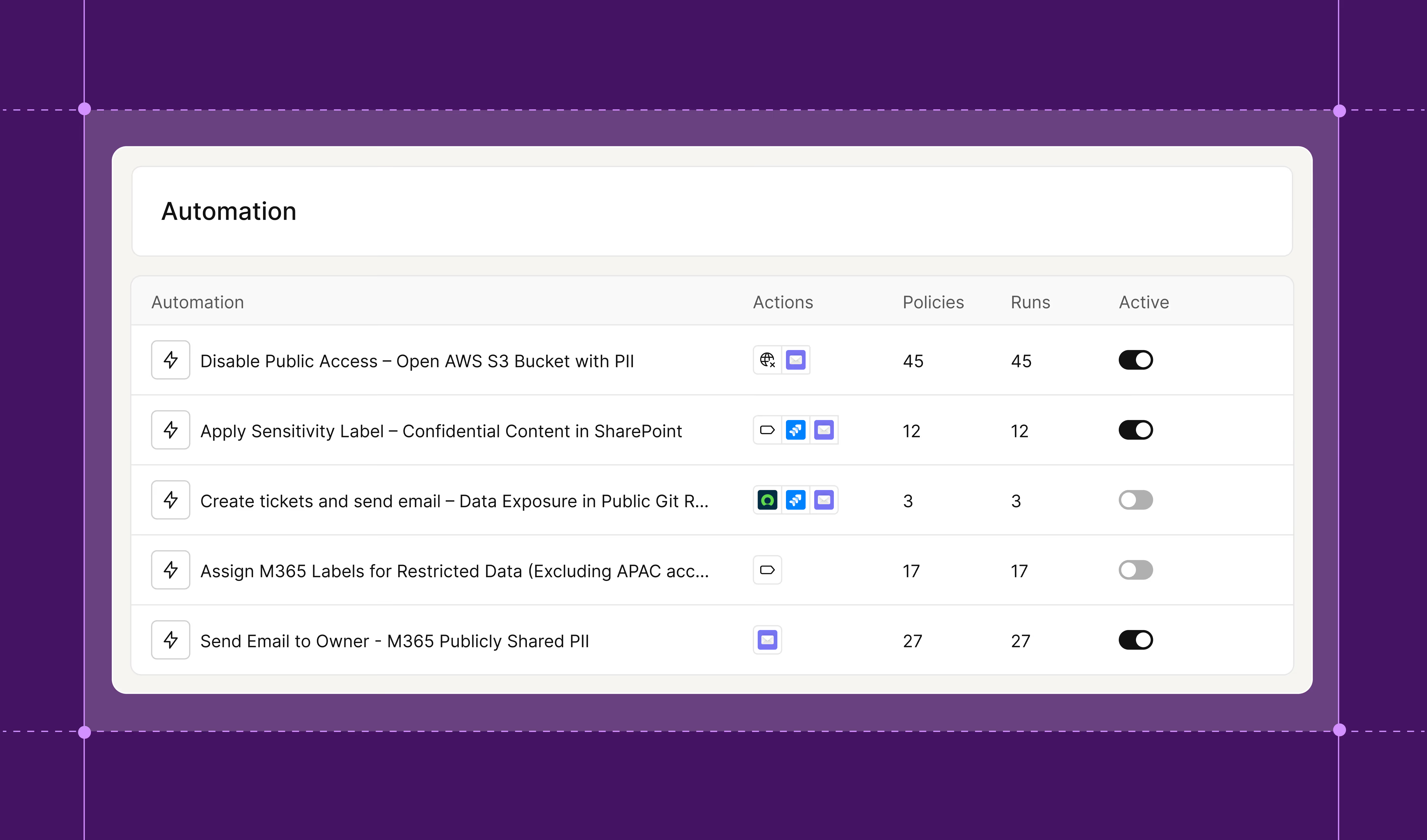

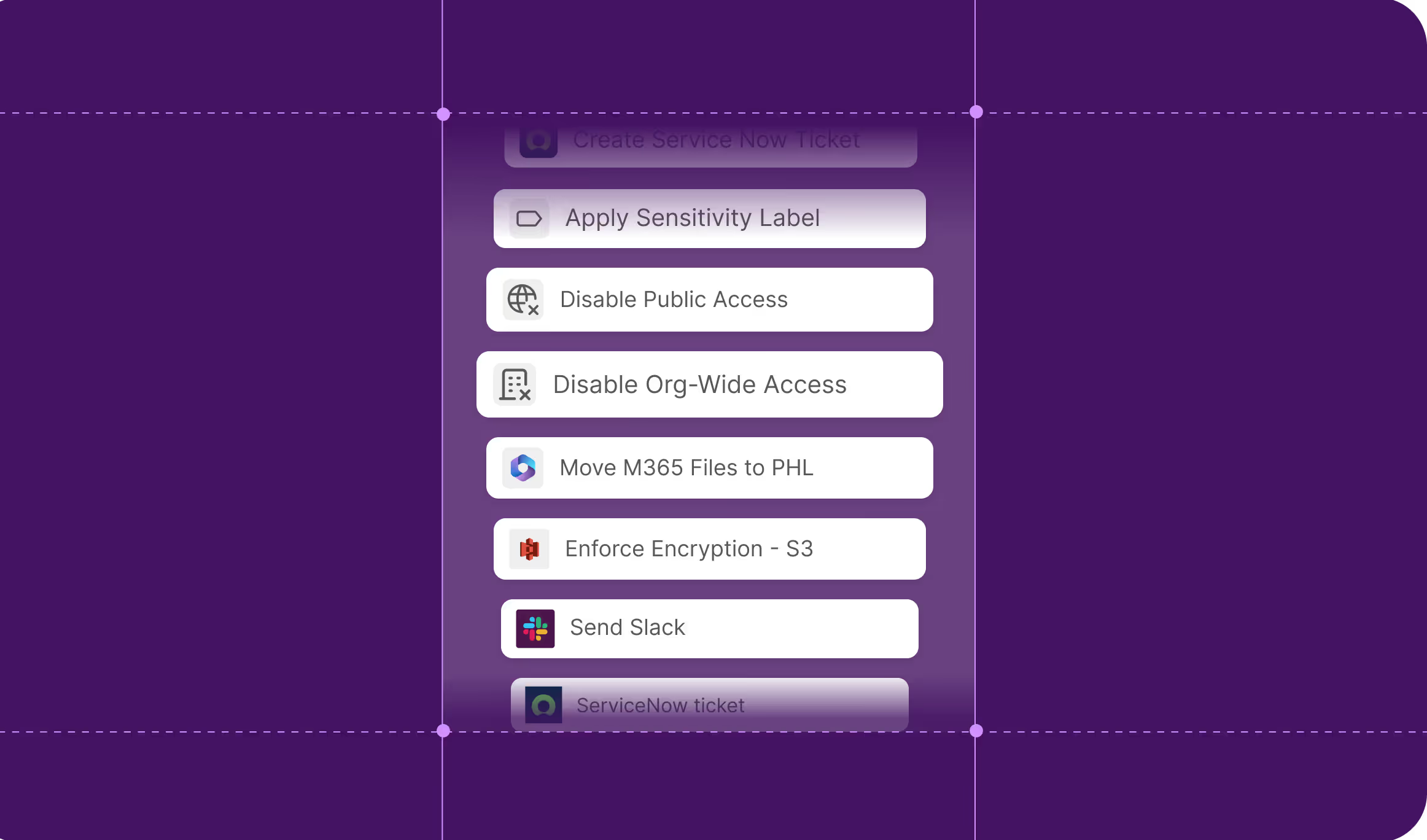

Auto-remediate risks quickly and at scale

Resolve data and AI risks using predefined rules that trigger native actions or automation workflows teams already rely on in platforms such as Tines and Torq.

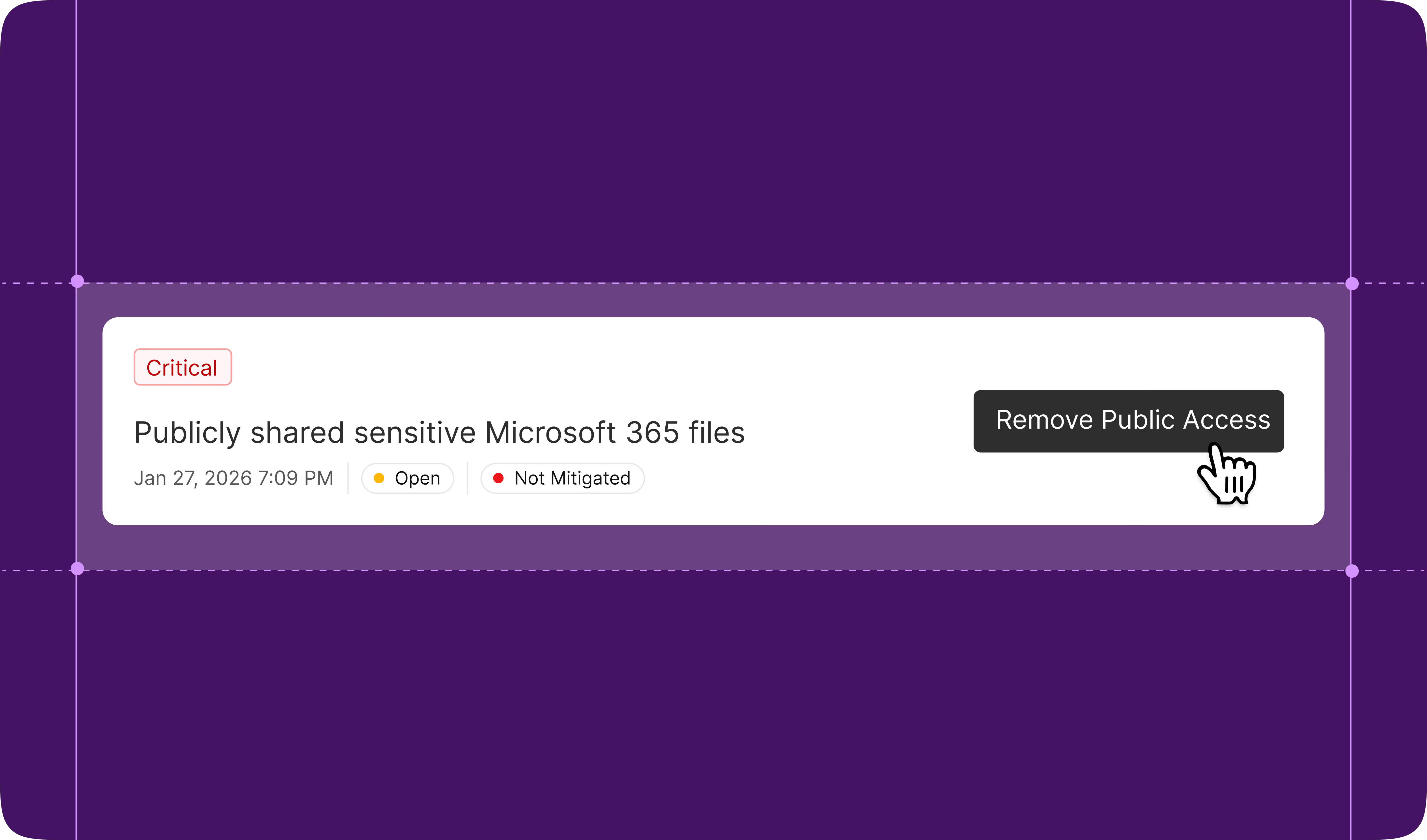

Take informed actions with one click

Fix data security issues safely across environments with built-in guardrails such as previews, blast-radius insights, and audit trails.

Turn business users into data security steward

Democratize data security by routing high-risk data exposures to data owners via custom notifications and a dedicated portal.

.avif)

Prove compliance with ease

Instantly generate auditor-ready evidence from continuously classified data and access trails, mapped to regulatory controls across cloud, SaaS, and on-prem data stores.

Customer stories

See why security & data leaders love Cyera

Implementing the Cyera data governance solution has significantly improved our visibility and control over sensitive data, helping us address compliance gaps, reduce data sprawl, and enforce consistent security policies across the organization.

Sean Mullins

CISO, Cass Information Systems

“The big piece that Cyera is doing is that as we understand our environment and the data within it, it's helping us make those data-driven decisions, and by having Cyera help us classify and inventory that, it's been a huge win”

Corey Kaemming

CISO, Valvoline

“We saw the value within the first week. We were able to see sensitive records, delete things that didn’t need to be there, identify the riskiest things that we needed to focus on first. The value realization was very rapid.”

"Here is the reality, everytime I go into that dashboard I am wowed. We've identified billions of sensitive records, but we've scanned through trillions. The scale is unfathomable."

Pete Chronis

Former CISO, Paramount

"The amount of information that Cyera gives me is almost immeasurable, comparable to the older tools that I used to use on prem. In cloud environments I hadn’t found anything that came close. The insight that my team gets and the business gets is something I can’t replicate."

Robert Preta

Director of Cyber Security, ACV